We find ourselves at a pivotal moment in philanthropy: The choices we make about emerging technologies like artificial intelligence (AI) right now could have lasting impacts on the communities we serve.

At the Annenberg Foundation, we are exploring AI’s potential not just as a tool for efficiency, but as a means to advance our mission in a way that’s aligned with our values. We were led by curiosity and a desire to prepare our own organization for AI use and its impact, and then to determine whether we could share what we learned with our valued colleagues in the sector.

Grounding AI in Values and Operational Excellence

Our exploration of AI over the past months has been rooted in a simple belief: Before we could begin to help or even lead, we needed to listen and learn.

To that end, the Foundation began by working with one of the co-authors of this piece (Chantal), the former executive director of the Technology Association of Grantmakers and co-author of the “Responsible AI Framework for Philanthropy.” Then, together we made three foundational moves to better understand how to start incorporating AI into our work:

- Listening Sessions: Rather than ask our staff, “How do you want to use AI?” we explored questions like, “What are the current pain points in your work?” and “How would you like to be more effective?” This approach allowed us to catalog potential AI use cases that could streamline our work.

- Staff Survey: We conducted an anonymous pulse-check survey to gauge staff awareness, concerns, and aspirations. A key finding was that a significant portion of staff (63 percent) are already experimenting with AI tools like Copilot and ChatGPT, primarily in a rudimentary fashion.

- Values Mapping: Using an interactive Mural board, we held an “AI Pop-Up Breakfast” for staff to translate the concepts of responsible AI into specific practices for our organization. For example, staff felt strongly that equity in the realm of AI meant that all staff have access to new tools and the training they need to be successful. Staff shared aspirations for AI such as:

- “AI needs are weighted equitably across departments to right-size needs.”

- “Everyone has access to all tools no matter your job.”

- “We have the ability to preserve our unique voice as a foundation.”

- “Make AI lingo and tools comprehendible to the average person.”

- “We are making our grantee partners aware that the Foundation is utilizing AI tools.”

Building an AI Governance Framework

The research above was instrumental in forming our approach to AI governance at the Foundation.

Like several peer foundations, we have chosen to govern AI within the context of data at our organization by creating a Data Usage Policy that also incorporates AI governance. Developing our policy involved classifying our data into sensitivity categories. It also incorporated the values identified by staff during the values mapping exercise described above.

The resulting Data Usage Policy is more than just a set of guidelines; it’s a living document that reflects how we as a foundation hold ourselves responsible for safeguarding the data of our nonprofit partners and our institution when using all forms of technology, including both predictive and generative AI.

If you’re just getting started yourself, we recommend creating an AI (or Data) Usage Policy that identifies, at a minimum, three things:

- Classification of data as public, non-sensitive, and sensitive/confidential

- Approved AI tools for each data type

- Responsibilities of the AI user to check accuracy, anonymize input data, and disclose usage
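
For teams that want to make such a policy easy to check in practice, the three elements above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Foundation's actual policy: the tool names (`public-chatbot`, `licensed-assistant`, `internal-assistant`), the mapping of tools to data types, and the `is_use_allowed` helper are all hypothetical.

```python
from enum import Enum


class Sensitivity(Enum):
    """The three data classifications named in the policy outline."""
    PUBLIC = "public"
    NON_SENSITIVE = "non-sensitive"
    SENSITIVE = "sensitive/confidential"


# Hypothetical tool names, for illustration only. A real policy would list
# the tools that its own data governance body has approved.
APPROVED_TOOLS = {
    Sensitivity.PUBLIC: {"public-chatbot", "licensed-assistant", "internal-assistant"},
    Sensitivity.NON_SENSITIVE: {"licensed-assistant", "internal-assistant"},
    Sensitivity.SENSITIVE: {"internal-assistant"},
}

# Responsibilities that stay with the person using the tool.
USER_RESPONSIBILITIES = (
    "check the accuracy of AI output",
    "anonymize input data",
    "disclose AI usage",
)


def is_use_allowed(tool: str, sensitivity: Sensitivity) -> bool:
    """Return True if the tool is approved for data of this sensitivity."""
    return tool in APPROVED_TOOLS[sensitivity]
```

A staff member could then ask, for example, whether `is_use_allowed("public-chatbot", Sensitivity.SENSITIVE)` holds before pasting confidential grantee data into a tool. Keeping the mapping in one place also makes it simple to update as the list of approved tools changes.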

Collaborating with Our Nonprofit Partners

Beyond our organization, we recognized early that our nonprofit partners also seek to streamline and innovate with advances in generative AI. However, rather than immediately offering AI grants or training, we again chose to listen first, a decision that unearthed important differences between foundation staff and grantees.

In surveying our grantees, we used several of the same questions asked in our staff survey noted above. For example, we asked:

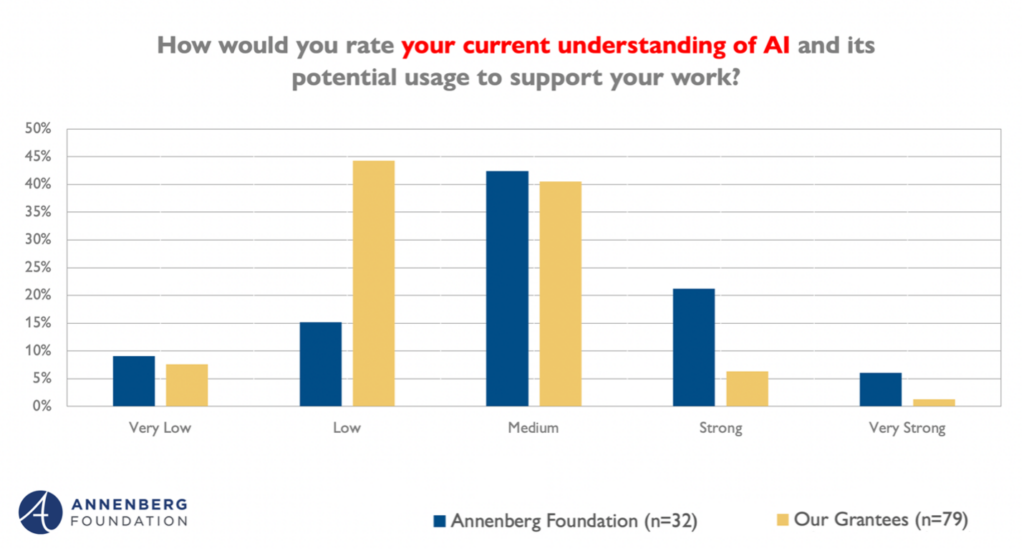

- How would you rate your current understanding of AI and its potential usage to support your work?

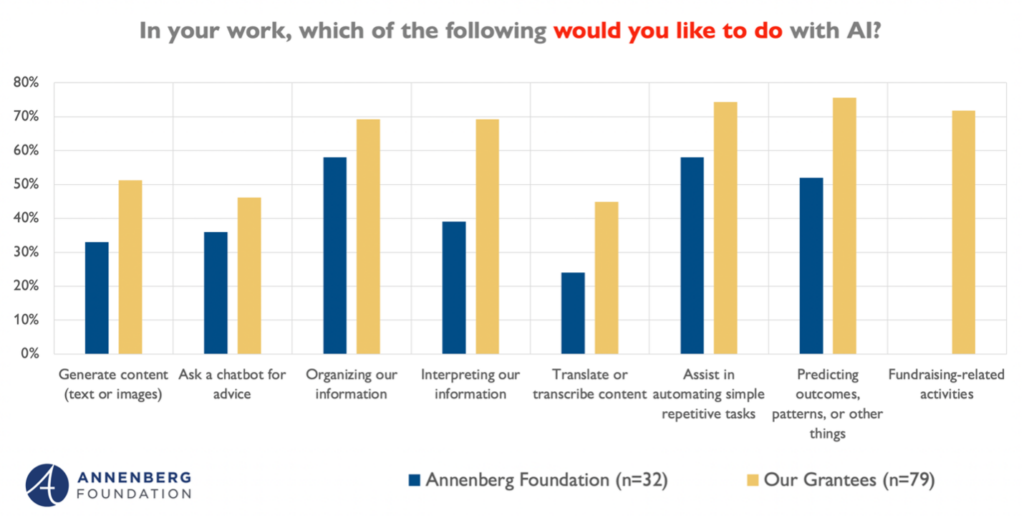

- In your work, which of the following would you like to do with AI?

Interestingly, while foundation staff reported a higher degree of AI understanding than nonprofits, they exhibited less urgency in identifying future applications of AI. Nonprofits, in contrast, see a more immediate and practical need for AI in nearly every area of potential application, especially fundraising.

This difference in perspective has been a crucial reminder for us of the privilege that philanthropy holds in being less vulnerable to the pressures of efficiency and competition for resources.

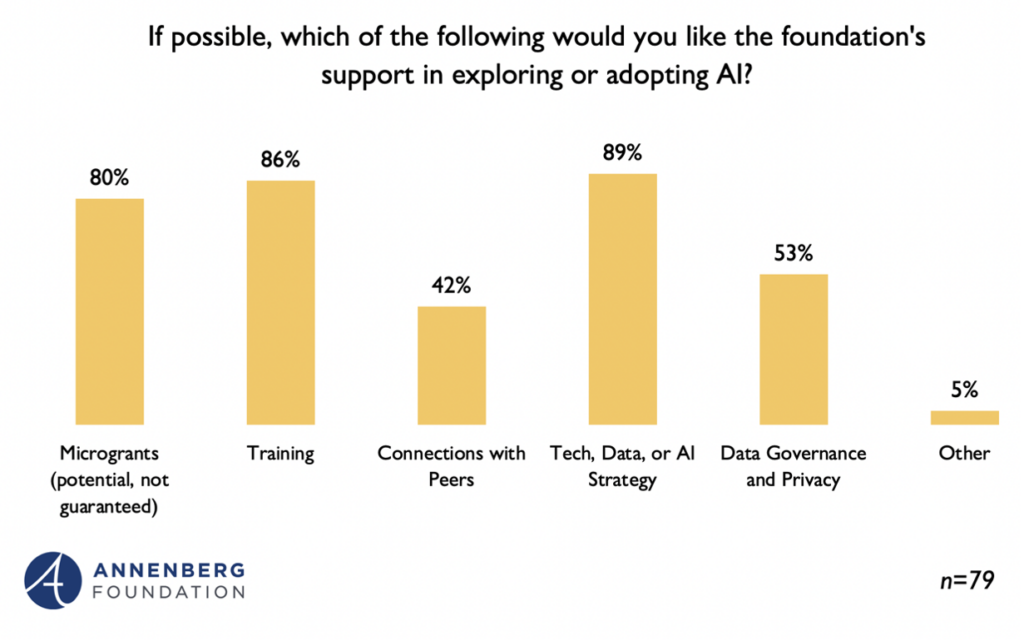

Additional survey questions revealed that, rather than a simple grant for AI experimentation, our nonprofits seem to prefer a combination of funding, skill-building, and support for strategy development.

Given these results, we’ve begun tailoring our AI grantmaking efforts to better align with the practical needs of our grantees, exploring how AI can be used to automate repetitive tasks and enhance information processing within the wider context of technology strategy and skill-building.

Looking Ahead: Sharing Our Lessons and Continuing the Conversation

Looking ahead, we’re excited to launch our AI pilot projects. We have already provided ChatGPT Teams licenses to all staff, and two additional pilots are under review by our Data Governance Committee:

- Image and Document Repository Classification and Search: A significant pain point for staff across multiple departments that could be addressed by off-the-shelf AI solutions.

- HelpDesk GPT and Onboarding GPT: Internal-facing customized chatbots to streamline employee assistance and onboarding.

As we move forward, we’re committed to sharing the lessons we’ve learned — both the successes and the challenges. We believe that by being open about our journey, we can help foster a culture of collaborative learning in the philanthropic sector.

Navigating AI should not be a solitary activity. Earlier this summer, we hosted an AI Peer Exchange with the Technology Association of Grantmakers (TAG), where we discussed the responsible implementation of AI alongside the Gates Foundation, Kalamazoo Community Foundation, and the Rockefeller Archive Center. Over the past several months alone, we’ve shared and collaborated with nearly 400 foundations at AI-related events with the Communications Network, the Council of Michigan Foundations, the Council on Foundations, and others.

Our hope is that through exchange and peer learning, we can navigate the complexities of AI together and ensure that emerging technology is used to benefit the communities we serve. We hope you’ll join us.

Cinny Kennard is executive director of the Annenberg Foundation. Find her on LinkedIn. Chantal Forster is AI strategy resident at the Annenberg Foundation. Find her on LinkedIn.

Editor’s Note: CEP publishes a range of perspectives. The views expressed here are those of the authors, not necessarily those of CEP.
